A bit of noise

“Bits are bits” is an opinion often expressed on audio forums.

As long as the bits are right, everything is right.

“Bits are bits” is an undeniable truth. There is nothing wrong with A=A; it is logically consistent.

According to information theory, A=A is a statement with a high predictive value; in fact 100%, as it is always true. By the same token it is a statement with a low informational value; in fact zero, because we already know that A=A.

If we talk PCM audio, it consists in theory of two components: the samples (the bits) and the time step (the sample rate).

Obviously, if we leave out the time step (that is the implication of the “bits are bits” approach), we leave half of what makes PCM out of the equation.

Leaving half of a phenomenon out of the equation will never yield valid conclusions.
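To make this concrete, here is a minimal sketch of what happens when identical sample values are clocked out with a different time step. The function names and the 1 kHz test tone are my own choices for illustration, not taken from any player or DAC.

```python
import math

def sine_samples(freq_hz, sample_rate_hz, n):
    """Return n sample values of a sine at freq_hz, sampled at sample_rate_hz."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz) for i in range(n)]

def played_frequency(original_freq_hz, recorded_rate_hz, playback_rate_hz):
    """Frequency heard when samples recorded at one rate are clocked out at another."""
    return original_freq_hz * playback_rate_hz / recorded_rate_hz

# The "bits": the same list of sample values is used in both cases below.
samples = sine_samples(1000.0, 44100, 441)

print(played_frequency(1000.0, 44100, 44100))  # same time step: 1000.0 Hz
print(played_frequency(1000.0, 44100, 48000))  # wrong time step: about 1088.4 Hz
```

The samples are bit for bit the same in both cases, yet the result differs by more than a semitone. Jitter is the same effect on a much smaller scale, varying from sample to sample.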

Then there is practice. As digital is done with analog electrons using analog components, there is always some dirt, some distortion.

If an analog signal is sent, the signal will become distorted, the longer the distance the more so.

The same applies to a digital signal, as it is analog as well: the square pulse will become distorted, the longer the distance the more so.

The big difference is that in the case of analog, we can do nothing about this distortion. There is no way the receiver “knows” what has been sent. It has to accept the signal as is.

A digital receiver knows what has been sent: a square pulse. As long as it can reconstruct the rise and the fall of the signal, it can reconstruct the original signal.
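A toy model shows why this works. The sketch below is only an illustration under my own assumptions: the "cable" is a simple one-pole low-pass filter plus random noise, and the receiver decides each bit with a fixed threshold near the end of the bit period. Real interfaces are more elaborate, but the principle is the same.

```python
import random

def transmit(bits, samples_per_bit=8, smoothing=0.5, noise=0.1, seed=1):
    """Model a cable: a one-pole low-pass rounds the edges, then noise is added."""
    rng = random.Random(seed)
    level = 0.0
    out = []
    for bit in bits:
        for _ in range(samples_per_bit):
            level += smoothing * (bit - level)  # sharp edges become slopes
            out.append(level + rng.uniform(-noise, noise))
    return out

def receive(signal, samples_per_bit=8, threshold=0.5):
    """Decide each bit from the last sample of its period, using a threshold."""
    return [1 if signal[i + samples_per_bit - 1] > threshold else 0
            for i in range(0, len(signal), samples_per_bit)]

bits = [1, 0, 1, 1, 0, 0, 1, 0]
received = receive(transmit(bits))
print(received == bits)  # True: the distorted waveform still yields the same bits
```

The waveform arriving at the receiver looks nothing like a clean pulse train, yet the decoded bits are identical. An analog receiver has no such threshold to fall back on.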

Indeed the bits are the most robust part of digital audio.

Bit errors are rare and if they happen we run for the volume control to save our speakers.

Unless some DSP is going on somewhere, our bits are faithfully sent from sender to receiver.

If our audio path is bit perfect, differences in sound quality are not because of the bits.

When in doubt, record the bits in the digital domain and compare with the original file.

In general these tests yield the same result: what is sent by the source is exactly what is recorded at the receiver.
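Such a comparison can be sketched in a few lines. This is a minimal example that assumes both the original file and the digital capture have already been decoded to lists of integer sample values; the helper names are hypothetical. A capture usually starts a little earlier and stops a little later than the track, so digital silence at both ends is trimmed before comparing.

```python
def trim_silence(samples):
    """Drop digital silence (zero samples) at the start and end of a capture."""
    start = 0
    end = len(samples)
    while start < end and samples[start] == 0:
        start += 1
    while end > start and samples[end - 1] == 0:
        end -= 1
    return samples[start:end]

def is_bit_perfect(original, captured):
    """True if both contain exactly the same samples, ignoring surrounding silence."""
    return trim_silence(original) == trim_silence(captured)

original = [0, 12, -7, 300, -32768, 32767, 5]
captured = [0, 0, 0] + original + [0, 0]      # same samples, extra silence
altered = [s // 2 for s in captured]          # e.g. a digital volume control at work

print(is_bit_perfect(original, captured))  # True
print(is_bit_perfect(original, altered))   # False
```

Any DSP in the path, even a volume control set slightly below maximum, changes the sample values and shows up immediately.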

All audiophile media players produce exactly the same output bit-wise. Bits are bits and bit perfect audio is bit perfect audio.

Unfortunately we don’t listen to digital audio. We don’t listen to bits but to a 100% analog signal.

At some stage we have to leave the digital domain and convert our rock solid bits into an analog signal. For this we need a DAC, a Digital to Analog Converter.

Each sample should be processed with exactly the same time step.

This is the job of a clock, which is itself an analog device with analog imperfections.

Our bits have a digital robustness that our clock will never have.

I must admit that today they do make clocks that are astoundingly accurate.

The deviations in the time step are measured in femtoseconds.

1 femtosecond is 0.000 000 000 000 001 s, or one millionth of a billionth of a second.
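To get a feel for what such numbers mean, one can use the standard textbook approximation for the best signal-to-noise ratio achievable when a full-scale sine of frequency f is converted with random clock jitter of RMS value t: SNR = -20·log10(2π·f·t). The sketch below applies it; the 20 kHz test frequency and the jitter values are my own picks for illustration, not measurements of any particular clock.

```python
import math

def jitter_limited_snr_db(signal_freq_hz, rms_jitter_s):
    """Best-case SNR in dB of a full-scale sine converted with random clock jitter."""
    return -20 * math.log10(2 * math.pi * signal_freq_hz * rms_jitter_s)

# 1 ns, 1 ps and 100 fs of RMS jitter on a 20 kHz tone (worst case in the audio band)
for jitter_s in (1e-9, 1e-12, 100e-15):
    print(jitter_s, round(jitter_limited_snr_db(20_000, jitter_s), 1))
```

This gives roughly 78 dB for 1 ns, 138 dB for 1 ps and 158 dB for 100 fs. The formula only covers random jitter on the conversion clock itself; it says nothing about how much jitter actually arrives there in a given design.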

All electronic components produce some “dirt”, some noise.

It can be RFI, EMI, a ripple on the ground plane.

If this creeps into the DAC, it might disturb the audio.

E.g. if the ground plane is modulated and this ground is the ground of the analog out, the output gets modulated.

That’s why isolating the DAC from the noise is important.

Evidence

To formulate what possibly might affect sound quality is not too difficult.

Bits can be in error, sample rate will fluctuate (jitter) and there will always be some noise.

The problem is to find evidence.

If different media players generate different amounts of ground plane noise, I would like to see some measurements proving this.

Audiophile optimizers abound.

All of them claim to improve sound quality by tweaking system parameters.

None of them provides any measurements.

If all these tweaks lower the noise floor, it should be easy to measure.
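The measurement itself is not rocket science. As a minimal sketch, assuming one records the analog output while playing digital silence and has the recording available as 16-bit integer samples, the RMS level relative to digital full scale can be computed as below. The function name and the example values are made up for illustration; they are not real measurements.

```python
import math

def rms_dbfs(samples, full_scale=32768):
    """RMS level of integer PCM samples relative to digital full scale, in dB."""
    if not samples:
        raise ValueError("need at least one sample")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")  # pure digital silence
    return 10 * math.log10(mean_square / full_scale ** 2)

# Hypothetical recordings of "silence" before and after applying a tweak
before = [3, -2, 4, -3, 2, -4, 3, -2]
after = [2, -1, 2, -2, 1, -2, 2, -1]

print(round(rms_dbfs(before), 1))
print(round(rms_dbfs(after), 1))
```

If a tweak really lowers the noise floor, the second number should be consistently lower than the first over repeated recordings. A spectrum plot, as produced by tools like RMAA, tells more, but a single figure like this is already a start.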

Measurements

There is a lot of talk on the Internet suggesting that a switching PSU is disastrous for sound quality. A simple test I conjured up is to use a laptop as a transport and disconnect it from the PSU. It will switch to the battery, hence pure DC.

This should yield a measurable improvement.

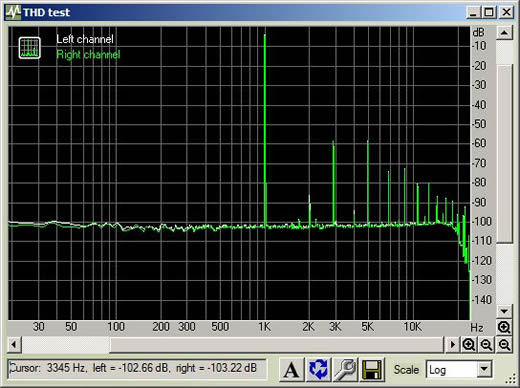

I did an analog loopback recording (sound card out into sound card in) with RMAA, once with the PSU in place and once without.

This is really horrible. Switching to battery power does give you a clean DC source, but at the same time the power saving kicks in, generating a lot of distortion.

This is perhaps typical for badly designed onboard audio.

What is going on inside the PC modulates the analog out.

Simply a bad design.

One can imagine that with an outboard DAC using asynchronous USB combined with galvanic isolation, the results might be completely different.

This is one of the reasons why it is hard to say if a tweak will work or not.

Our systems differ substantially.

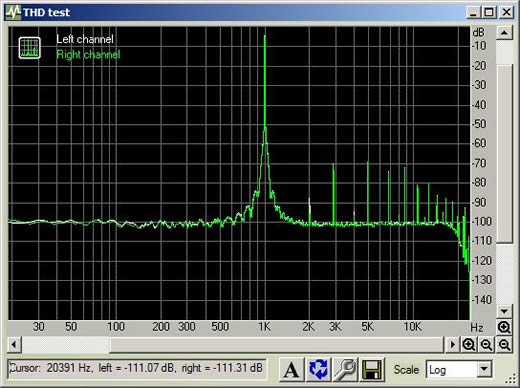

Cambridge Audio published jitter measurements demonstrating the impact of the PSU of a PC on the jitter performance.

Their results are exactly the opposite of mine.

Here using the PSU does the damage and using the battery yields the best results.

Obviously they have done nothing to shield the USB receiver of the DacMagic from the noise.

A bad design, in my opinion.

More on USB isolation can be found here

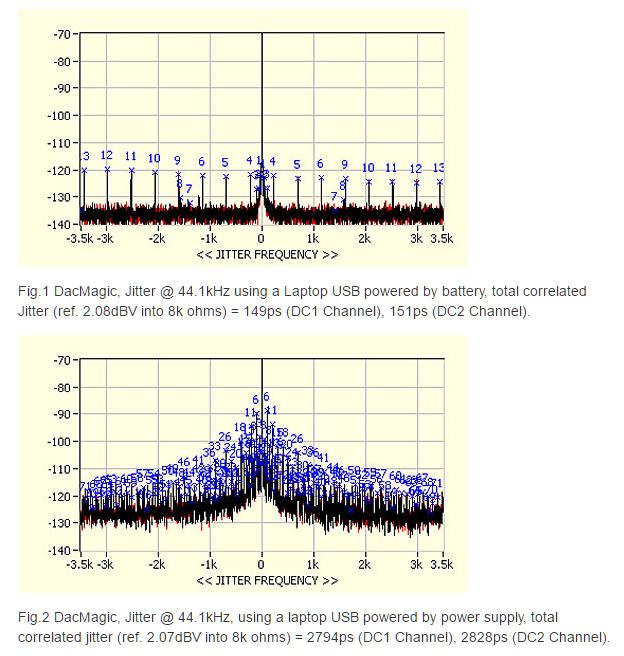

Archimago [1] did some interesting tests.

He did a jitter test and repeated it with the CPU and the GPU running at 100%.

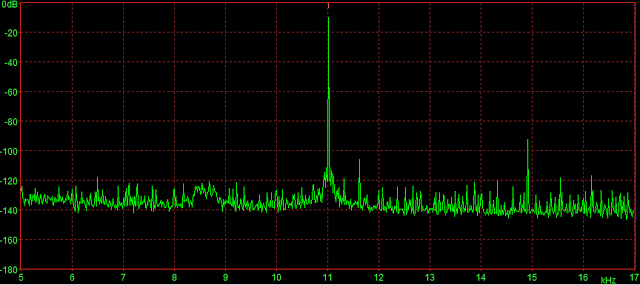

Adaptive mode USB (system load not specified)

Adaptive mode USB 100% CPU/GPU

Some additional noise is showing up between 8 and 9 kHz.

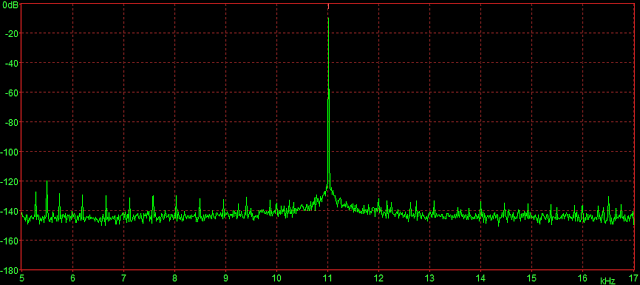

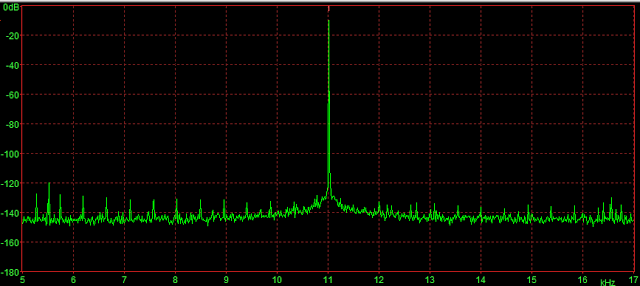

Asynchronous mode USB (system load not specified)

Asynchronous mode USB with 100% CPU/GPU

Almost impossible to spot a difference.

Summary

Obviously, “bits are bits” is an oversimplification.

The samples are the only part of our digital audio that is truly digital.

The sample rate is generated by a clock, an analog device with analog imperfections.

Processors, and certainly a PC full of all kinds of components, are indeed a source of noise.

The question is how well our DAC is isolated from the source.

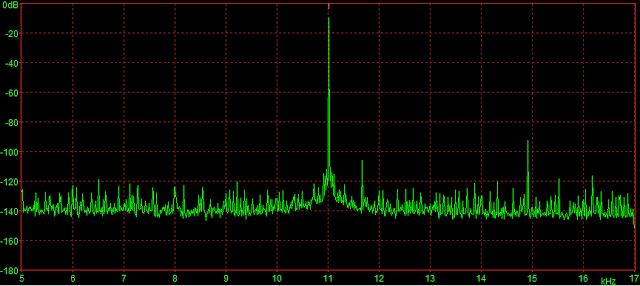

If one looks at measurements of modern DACs using the USB input, one in general hardly sees any disturbance at all.

No sign of noise, no sign of jitter. A noise floor as low as -120 dBFS seems common in $1000 DACs.

It makes one wonder whether we have an issue at all.

- MEASUREMENTS: Adaptive AUNE X1, Asynchronous "Breeze Audio" CM6631A USB, and Jitter - Archimago's Musings

- Cambridge Audio Azur DacMagic D/A converter, Manufacturer's Comments - Stereophile